Facebook evaluates advertisers not through a single isolated ad but through the account’s operational behavior over time. Content, editing behavior, payment history, and responses to reviews are all recorded as signals of credibility. When these signals deviate from standards, accounts can easily fall into a restricted state without a clear warning. Compliance with Facebook ad guidelines must therefore be positioned correctly: not just to pass reviews, but to keep the entire advertising system functioning smoothly. A sustainable advertising strategy starts with implementation discipline, where compliance is an operational principle rather than a reactive reflex after risks have already materialized.

By what mechanism does Facebook’s ad policy control accounts?

Facebook does not control ads through manual individual reviews but operates a multi-layered evaluation system where policy is only the surface level. Behind it is a network of algorithms continuously collecting signals from ad content, landing pages, account history, and operational behavior. As a result, many advertisers mistakenly believe that being “policy-compliant” in terms of wording is sufficient, whereas in reality, Meta’s control mechanism is comprehensive and continually evolves.

How the automated review system works

As soon as an ad is created, Facebook’s system scans the content using natural language processing and image recognition technology. The algorithm does not just read words literally but analyzes context, the nuance of claims, and the level of impact on users. Keywords that affirm results, promise personal gain, or directly affect finances and health are often assessed at a higher risk level, even if they do not explicitly violate policies.

In parallel with content review, the system continues to monitor user reactions after the ad is delivered. Report rates, negative feedback, short time spent on the landing page, and high bounce rates are all treated as quality signals. When these signals accumulate negatively, monitoring of the account increases without public notice. This is why many accounts have their ads approved initially but are restricted after a few days of running, despite no changes in content.

The connection between content, landing pages, and accounts

Facebook does not evaluate each factor in isolation. Ad content, landing pages, and accounts form a logical chain. If an ad uses neutral language but the landing page contains misleading claims, the system still records it as a violation at the holistic level. Conversely, a perfectly built landing page paired with ad content that creates pressure or excessive incitement to act can also bring risks to the entire account.

Beyond displayed content, website structure, page load speed, transparency of business information, and terms of use all affect credibility. Landing pages lacking basic legal information or constantly changing content across campaigns are often judged as unstable. When this instability repeats, the ad account is placed into a more closely monitored group, even if individual campaigns are still approved.

Outcomes depend not just on the content itself but on the account’s “resume.” Every ad account has a trust profile built over time from spending history, violation frequency, compliance levels, and responses to previous warnings. Content deemed acceptable on a high-trust account can still trigger restrictions on a new account or one with a history of violations.

The operational environment also plays a vital role. Accounts that frequently change payment methods or login devices, or that show unusual administrative behavior, are assessed as higher risk. When this is combined with ad content in sensitive policy areas, the likelihood of an account ban increases significantly. This explains why the same ad can run stably for a long time for one business while another is restricted after only a few deliveries.

Facebook’s control mechanism is not aimed at immediate ad removal but focuses on long-term filtering and behavior adjustment. Understanding how this system works helps advertisers build content strategies and account operations toward sustainability, rather than just focusing on crisis management after incidents occur.

Policy groups most likely to cause account bans

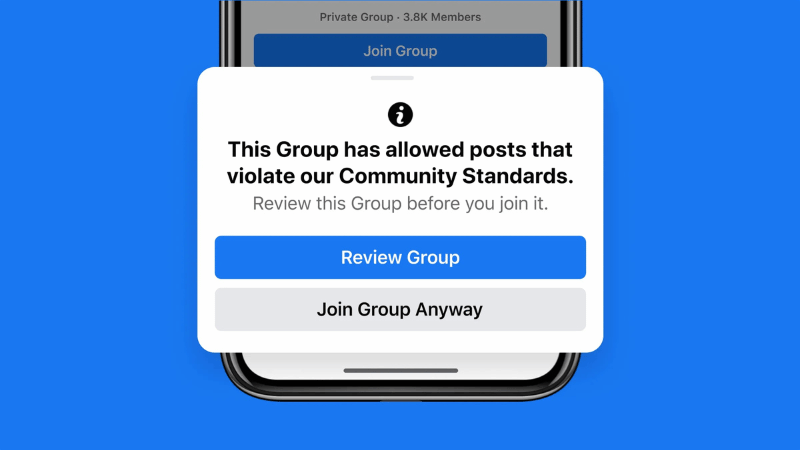

When deploying Facebook ads, most cases of account restriction or disablement do not stem from technical errors but from directly touching high-control policy groups such as the Special Ad Categories. Meta not only evaluates individual ads but also monitors repetitive behavior, content phrasing, and the cumulative risk level of the entire account. Therefore, if the same type of violation appears repeatedly, the account will quickly be placed under tighter monitoring.

Content deemed misleading or over-promising

Facebook is particularly sensitive to content that creates a sense of certainty about results, especially when ads relate to finance, health, education, or personal improvement. Automated review systems are trained to detect high-affirmation phrases that lead viewers to believe results will occur in an almost absolute manner.

Review data shows that accounts using messages that lean toward “guaranteeing” or “certain to achieve,” or that describe results within specific timeframes, are often judged as setting misleading expectations for users. Even if the product or service can actually deliver, presenting information as an assured result is still viewed as a risk. Meta prioritizes content describing the process, utility value, and general experience rather than focusing on the final result.

In addition to the main ad content, the headline, short description, and landing page are all cross-referenced. If there is even one inconsistency, the system marks the ad as suspicious. This explains why many campaigns are rejected even after the ad content is edited, because the landing page retains its old phrasing.

Sensitive industries and additional control requirements

Certain business sectors always fall within Facebook’s special control zones due to their direct links to financial interests, personal information, or high-risk consumer behavior. These industries are not completely banned but must meet strict additional conditions regarding content, targeting, and legal profiles.

Review systems often flag ads early in categories like finance, investment, credit repair, cryptocurrency, weight loss products, invasive beauty services, or certain forms of personal consulting. In these cases, the ad account is not only reviewed at the content level but also evaluated for operational history, Page credibility, and the transparency of the underlying business.

Operational reality shows that with the same ad content, the restriction rate is significantly higher when run on new accounts or those without a stable history. This stems from Meta’s risk management mechanism, where sensitive industries are always assigned higher-than-normal monitoring coefficients. If an account does not provide enough trust signals, an early ban is almost a predictable scenario.

Common errors in images, language, and CTA

Many advertisers focus on editing text but overlook images and calls to action (CTA). In practice, these two components frequently trigger Facebook’s automated filters. Strongly comparative images, which highlight drastic changes or create a sense of direct impact on the user’s body or finances, are often assessed as unsafe.

Regarding language, addressing the viewer in an excessively personal way, directly implying that the viewer has a particular problem, or exploiting psychological weaknesses can easily lead to violations of the policy against implying personal attributes. Facebook does not allow ads that make users feel monitored or “read,” even if the content does not mention specific personal data.

The CTA is also an area prone to risk if it is coercive, creates a false sense of urgency, or promises benefits beyond verification. Overly aggressive calls to action often cause the system to judge the ad as having manipulative elements, thereby directly affecting the account’s quality score.

How to comply with Facebook ad guidelines from the setup stage

Most ad account bans do not originate from serious violations but from minor discrepancies appearing at the initial setup stage.

A core principle in setup is ensuring absolute consistency between the ad and the ecosystem outside the ad, specifically the website. When ad content conveys one message, but the landing page expresses something else, the system views this as a misleading signal and immediately raises the account’s alert level.

Standardize ad content and landing pages from the start

Facebook does not evaluate ads in isolation. Every word, image, and CTA is cross-referenced with website content. Discrepancies that seem small, such as information on price, offers, or shipping policies, are common reasons for account flagging. When an ad promises a favorable condition but the checkout process reveals additional costs or unmentioned terms, the system considers this misleading behavior, regardless of the business’s intent.
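The cross-check described above can be rehearsed internally before an ad is submitted. The following is a minimal sketch, assuming the team records the key claims of the ad and of the landing page as simple key-value pairs; the function names and sample values are hypothetical, and this is an internal checklist aid, not a reproduction of how Meta compares ads with websites.

```python
def normalize(value):
    """Compare strings case-insensitively and ignore surrounding whitespace."""
    return value.strip().casefold() if isinstance(value, str) else value


def find_mismatches(ad_claims, page_claims):
    """Return {claim: (ad_value, page_value)} for every claim made in the ad
    that is missing from, or stated differently on, the landing page."""
    mismatches = {}
    for key, ad_value in ad_claims.items():
        page_value = page_claims.get(key)  # None if the page never states it
        if normalize(ad_value) != normalize(page_value):
            mismatches[key] = (ad_value, page_value)
    return mismatches


# Hypothetical sample data for illustration only.
ad = {
    "price": "29.00 USD",
    "shipping": "Free shipping",
    "offer": "20% off first order",
}
page = {
    "price": "29.00 USD",
    "shipping": "Shipping from 4.99 USD",
    "offer": "20% off first order ",
}

print(find_mismatches(ad, page))
```

In this example only the shipping claim is reported, because the ad promises free shipping while the page states a fee. Any non-empty result is a reason to align the ad and the website before submission.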

Most violations appear in the text content of the ad. Phrases with high commitment, describing certain results, or excessively shortening the time to achieve goals are often seen as unsafe. Facebook is particularly sensitive to phrasing, as even a single word of strong affirmation is enough to trigger an automated filter. In practice, many campaigns have been “saved” simply by adjusting one phrase in the content, even though the overall message remained unchanged.
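Because a single strongly affirmative phrase can be enough to cause trouble, some teams run their copy through a simple internal check before submitting. The sketch below is one way to do that, assuming a hand-maintained pattern list; the patterns, labels, and function name are hypothetical illustrations and do not represent Meta’s actual filter rules.

```python
import re

# Hypothetical patterns chosen for illustration; not Meta's real rules.
RISKY_PATTERNS = {
    "guarantee": re.compile(r"\bguarantee[ds]?\b", re.IGNORECASE),
    "certainty": re.compile(r"\b(certain to|sure to|always works|never fails)\b", re.IGNORECASE),
    "timeframe": re.compile(r"\bin (just )?\d+ (day|week|month)s?\b", re.IGNORECASE),
}


def lint_ad_copy(fields):
    """Return a list of (field, label, matched_text) for each risky phrase.

    `fields` maps a field name (headline, description, landing page text)
    to its copy, so the headline and landing page are checked together.
    """
    findings = []
    for field, text in fields.items():
        for label, pattern in RISKY_PATTERNS.items():
            for match in pattern.finditer(text):
                findings.append((field, label, match.group(0)))
    return findings


# Hypothetical sample copy for illustration only.
copy = {
    "headline": "Guaranteed results in 7 days",
    "landing_page": "Learn how our program works",
}

for finding in lint_ad_copy(copy):
    print(finding)
```

Checking every field in one pass matters because old phrasing left on the landing page can undermine an ad whose text has already been edited.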

Warm up new accounts to build trust signals

A common mistake is deploying ads immediately with a new account, especially in rapid product testing or dropshipping models. New accounts without an operational history usually do not receive high levels of trust from the system. Creating and using multiple accounts consecutively in a short time further increases the risk of a negative assessment.

The account warming process should be seen as building credibility for a new entity. Organic activity, business verification, and linking to personal accounts with stable histories are all important signals that help Facebook judge an account as “real.” Some strategies also use low-budget engagement campaigns to generate positive behavioral data, thereby reducing review pressure when starting conversion ads.

Control risk by separating account systems

Facebook can link accounts through browsers, devices, or login behavior. When one account encounters an issue, related accounts may face a chain reaction. This is why keeping accounts separate becomes a key factor in risk management.

Beyond technical factors, creative quality plays a large role in maintaining a safe status. Shocking images, direct comparisons, or over-promising often lead to accounts being assessed as high risk. Facebook favors content describing experiences, usage contexts, and general value rather than emphasizing specific results. Experience shows that by simply changing the phrasing in the CTA or payment description, many previously flagged ads can run normally again.

Keep payments and verification in a “clean” state

The payment system is one of the most sensitive points. Card issues, failed transactions, or payment methods previously linked to banned accounts can cause new accounts to be restricted immediately. Linking more than one reliable payment method helps reduce the risk of disruption when Facebook bills the account on its regular cycle.

Simultaneously, alert management plays a crucial role. When an ad is rejected, resubmitting the same content often causes the system to record it as an intentional attempt to bypass review. A safe process always starts with pausing, identifying the cause, and thoroughly editing before resubmitting.
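The pause, diagnose, edit, resubmit sequence can be enforced with a small internal gate. The following is a minimal sketch, assuming the team keeps the rejected copy on file; the 0.9 similarity limit and the function names are hypothetical choices, and the comparison uses Python’s standard `difflib`, which says nothing about how Meta itself detects repeated submissions.

```python
from difflib import SequenceMatcher

# Hypothetical limit: revised copy this similar to the rejected copy
# is treated as "not really edited". Tune to your own workflow.
SIMILARITY_LIMIT = 0.9


def similarity(a, b):
    """Return a 0..1 similarity ratio between two pieces of copy."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio()


def ready_to_resubmit(rejected_copy, revised_copy, cause_identified):
    """Allow resubmission only if the rejection cause was identified
    and the revised copy differs meaningfully from the rejected one."""
    if not cause_identified:
        return False
    return similarity(rejected_copy, revised_copy) < SIMILARITY_LIMIT


# Hypothetical sample copy for illustration only.
rejected = "Guaranteed to lose 10 kg in 2 weeks!"
revised = "A coaching program built around daily habits and realistic goals."

print(ready_to_resubmit(rejected, rejected, cause_identified=True))
print(ready_to_resubmit(rejected, revised, cause_identified=True))
```

The first call is blocked because the copy is unchanged; the second passes because the message was genuinely rewritten. A text-similarity gate only covers the ad copy, so the landing page and CTA still need a manual review.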

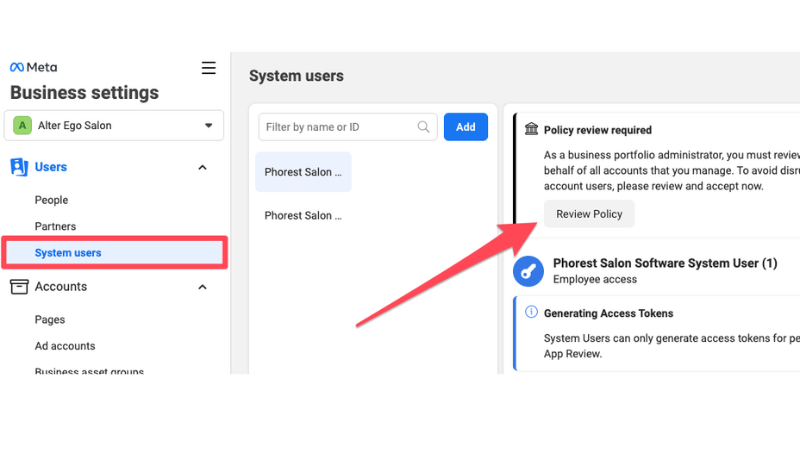

Manage access rights with a hierarchical structure

Business Manager, or Business Center, is where all advertising assets are stored. In the hierarchical structure of Facebook Ads, the higher the level at which a restriction lands, the wider the damage. When a Business Manager is banned, all associated ad accounts and assets lose usage rights. When an individual’s personal advertising access is restricted, that person’s ability to deploy campaigns all but ends.

Verifying the Business Manager helps the business prove legal ownership in case of incidents. At the same time, verifying personal identity as an advertiser helps Facebook clearly identify the person behind the system. Combined with two-factor authentication and strict access role management, the advertising system significantly reduces the risk of unauthorized access or accidental bans.

At a long-term operational level, these setups are not just to avoid account bans but to create a stable foundation for ads to scale without constantly facing policy risks.

Frequently Asked Questions

Continually creating new ads to evade rejection usually makes the situation worse. Facebook tracks behavioral patterns, not just ad IDs. If the content, landing page, or CTA fundamentally remains the same, the system will still recognize it and consider it an attempt to circumvent review. The safe approach is to stop the ad, adjust the message to reduce commitments, clarify website information, and then resubmit. This helps the account send a signal of compliance rather than confrontation.

Compliance with policies is not a fixed state but a continuous process. Facebook policies change frequently, as do the system’s risk evaluation methods. Content that was accepted previously may become more sensitive later. Furthermore, scaling budgets too quickly, adding multiple new admins, changing payment methods, or changing the destination domain can trigger the system to re-evaluate the entire account. When the risk score exceeds the threshold, enforcement action occurs almost immediately.